By Eric Schafer

Simulation Architect at Iris Automation

Like human pilots, artificial intelligence autopilots practice not crashing in a simulator. Having transitioned from many years of movie visual effects (crashing encouraged) at Industrial Light & Magic to simulation in the Aerospace industry (crashing frowned upon) at NASA Ames and now Iris, my expectations for simulator realism are high (translation: not commercially available). Engineering a low-altitude flight simulator at Iris has been a collaborative challenge, fusing our collective visual effects, aerospace, and geographic information system (GIS) skills. But why not just train AI on real data from the real world?

When Deep Learning is your AI performance enhancer of choice, you get to know the side effects. The main one is overfitting: the system learns a few examples well but is unable to generalize. It’s like cheating on the test to pass now but fail later: Bad AI, bad, bad! Overfitting typically isn’t the result of not learning all of the available data; rather, it’s a symptom of not having enough data in the first place.

And real-world data is hard to get, especially examples that would summon Federal Aviation Administration (FAA) investigators. So, when you really need a steaming pile of Big Data, don’t give up on AI; use a simulator to cook up fake data, all you can eat. However, before taking on the challenge of building a simulator, what if another Deep Learning model had already been trained on similar data? Could happen.

Transfer Learning is another over-the-counter remedy for overfitting that lets you reuse what was learned from other related data. It’s especially valuable if that related data is from the real world! With so many PhDs spawned by Transfer Learning, it’s worth digressing into why a simulator wins.

Transfer Learning: A Bad Analogy

The other day, a group of little kids inflicted on me the song Chattanooga Choo Choo. I practically had to seek therapy to get that out of my head. But it got me thinking about training AI with Transfer Learning.

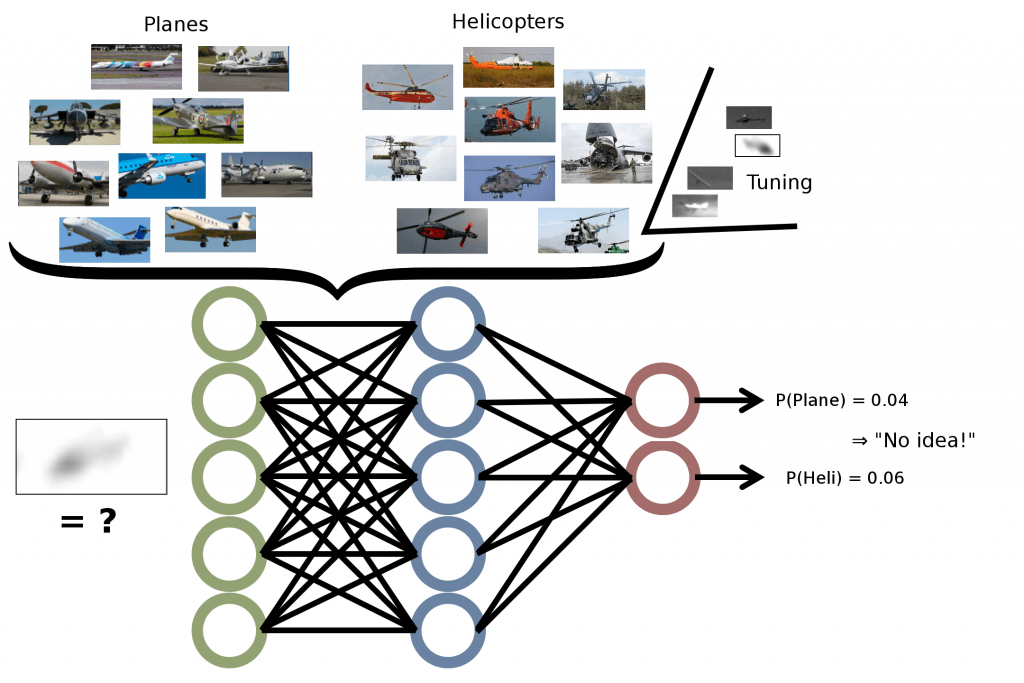

Transfer Learning is the transfer of encoded knowledge from a related conditional probability distribution to a new one specific to your domain. It is popular in cases where you do not have enough training data for standard Supervised Machine Learning to generalize, BUT someone else already trained a Deep Learning model on a lot of sorta kinda similar data.

Transfer Learning is a pail of fail when your input is much different from what trained the transferred model.

It’s like that one summer in college when I was a singing telegram and learned oldies like “Chattanooga Choo Choo” with the words swapped out for some sardonic birthday incantation. I kinda knew the tune but had to learn new words. At first, I sucked so badly that a customer asked for their money back. Okay, bad analogy. But eventually I got the new lyrics down. One time I serenaded this petrified kid in a wheelchair in front of fifty of his closest summer camp friends, who thought I was the funniest thing in the known universe. Okay, good analogy.

In other words, Transfer Learning is turning AI that is a bad analogy into a good one. If you are lucky enough to find a neural net model trained on data similar to what you wish you had, Transfer Learning can save your life (or at least your AI’s life). But that’s the problem: in our domain of low-altitude aircraft especially, Deep Learning models trained in the same context are pretty much the empty set. Image recognition challenges, for example, generally classify stunning close-up photographs of planes, not fuzzy blobs on the horizon. And transferring from only loosely related models doesn’t really work.

Like later that summer, I sang to a lady dying of lung cancer on her final birthday. The “Chattanooga Choo Choo” birthday lyrics seemed so incongruous: “You’re not getting old, you’re getting better.” She mustered a smile of irony and one last puff of laughter, as if to say thanks, but that’s not funny. Okay, bad analogy.

Synthetic Data: Simulator Yo!

A simulator makes fake data. Harsh. “Synthetic data” softens it a bit. But hang on: in addition to faking safe “nominal” situations like a plane in the distance, a simulator gets to cover the drama: near mid-air collisions, too many planes, crazy pilots, vomitous turbulence, smoke, engine failure, sensor failure, camera problems, all edge cases that are too dangerous or illegal to test in the field. As with a human pilot, the more training an AI test pilot has in dangerous situations, the safer it will be in the real world.

For computer vision purposes, simulation also needs to look photorealistic! What’s the difference between a simulator and an action movie? One has a plot and the other has a theater. It is no coincidence that making a simulator for training computer vision sounds like movie visual effects. (It is a coincidence that two of us at Iris have credits on Pirates of the Caribbean 3.)

The composition of the “shots” is what makes the resulting synthetic data valuable: how aircraft come into frame, the environment, lighting, atmosphere, lens flare, camera vibration, timing. In a movie, these elements are used to advance the story; in a simulator, they are used to train AI autopilots not to crash. One difference that makes the simulator more of a psychological thriller is that, unlike a movie ending when the credits roll, Synthetic Data: The Motion Picture ends with the trained AI going out into the real world and flying without causing any “special effects” on other aircraft.

A low-altitude airspace simulation within one of Iris’ testing environments near Reno, Nevada.

Keep it real.

Even if simulated data looks photorealistic, real-world data is always more, well, realistic. The best of both worlds is to use both synthetic and real data. There are many ways to mix the two: train with sim data and validate with real data, or train and validate with some ratio of sim and real data, or train with all synthetic data and refine the model with real data.
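To make the "some ratio of sim and real data" option concrete, here is a minimal sketch of one way to build a mixed training set. The function name `mix_datasets` and its parameters are hypothetical, invented for illustration; this is not a description of how Iris actually does it. It samples with replacement, on the assumption that real examples are scarce and need to be oversampled against a much larger synthetic pool.

```python
import random


def mix_datasets(sim, real, real_fraction, size, seed=0):
    """Draw `size` samples, with about `real_fraction` of them from `real`.

    Hypothetical helper for illustration. Sampling is done with
    replacement so a small real set can be oversampled against a
    large synthetic one.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)          # seeded for repeatable mixes
    n_real = round(size * real_fraction)
    n_sim = size - n_real
    batch = rng.choices(real, k=n_real) + rng.choices(sim, k=n_sim)
    rng.shuffle(batch)                 # interleave real and synthetic
    return batch


# Toy stand-ins: lots of synthetic samples, only a handful of real ones.
sim_samples = [("sim", i) for i in range(1000)]
real_samples = [("real", i) for i in range(10)]

training_set = mix_datasets(sim_samples, real_samples,
                            real_fraction=0.2, size=100)
```

The right value for `real_fraction` is an empirical question; the sketch only shows the mechanics of the mix.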

Say some AI model was trained to be a ninja in a simulator for 10 years in a mountain temple. You want a model trained in the real world but only have a coupla real examples. Retrain that sim ninja model on your precious real-world data, then watch it kick some ass.
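The sim ninja idea can be sketched with a deliberately tiny model. Everything below is an illustrative assumption: the "model" is a one-dimensional line `y = w*x + b`, the synthetic data is plentiful but slightly off from reality, and there are only three real examples. The slope learned in simulation is frozen and only the offset is refined on real data, which is one common fine-tuning choice, not necessarily the one used at Iris.

```python
def fit(w, b, data, lr, epochs, train_w=True):
    """Plain gradient descent on mean squared error for y = w*x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        if train_w:                    # leave the slope alone when frozen
            w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, data):
    """Mean squared error of the line on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical toy data. The simulator follows y = 2x + 1.0,
# while the real world follows y = 2x + 1.5 (a small sim-to-real gap).
sim_data = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-50, 51)]
real_data = [(-1.0, -0.5), (0.0, 1.5), (2.0, 5.5)]

# 1) Train from scratch on the plentiful synthetic data.
w_sim, b_sim = fit(0.0, 0.0, sim_data, lr=0.05, epochs=500)

# 2) Refine on the few real samples, keeping the sim-learned slope frozen.
w_ft, b_ft = fit(w_sim, b_sim, real_data, lr=0.1, epochs=200, train_w=False)

error_before = mse(w_sim, b_sim, real_data)   # sim-only model on real data
error_after = mse(w_ft, b_ft, real_data)      # after fine-tuning
```

In this toy setup the fine-tuned model fits the real samples better than the sim-only one because the simulator already got the hard part (the slope) right. When the simulator is far from reality, that head start shrinks.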

“I know you like music; here is a gift.”–Ninja Tune

However you remix it, a simulator helps you fake it ‘til you make it! At Iris, we train and test with a mixture of all the safe real data we can get (from our Iris Flight Operations team in Reno, American and Canadian Integration Pilot Programs, and Early Adopter Customers) along with all the synthetic data we can handle from our simulator. The more realistic it is, the safer we’ll fly when it starts to get real.