by Eric Schafer

Technology that helps a vehicle not crash is called a Collision Avoidance System (CAS). In cars, a CAS is part of an Advanced Driver Assistance System (ADAS), providing automatic braking and lane keeping, while alerting the driver about surrounding traffic. In an aircraft, a CAS is part of an avionics package that alerts the pilot to nearby planes and advises how to avoid them.

Collision Avoidance Systems share two common components: a sensor suite and compute. The choice of sensors depends on the type of vehicle and the environment. For cars, LIDAR is a common choice, since it provides accurate short-range distances to objects around the vehicle. For ships, RADAR makes sense for long-distance visibility through fog. Under water, SONAR makes sense. GPS makes sense on land but not under water or in space, and so on. The other common component in CAS technology is compute, the mass noun that means computing power plus the software that runs on it; in this case, perception software that interprets sensor data to see the world. As compute enables more autonomy, the overall trend in CAS technology is to move away from giving a human enough situational awareness to react in time (slow) and toward reacting autonomously (fast). For a broader industry perspective on collision avoidance in aviation, check out our article, “Detect-and-Avoid: How Airborne Collision Avoidance Works.”

In fully Autonomous Vehicles (AVs), whether flying or ground-based, there is no human in the loop. The CAS needs to work extremely well, as there is no driver to take the wheel, no pilot to take the stick. Rather than simply slamming on the brakes, an autonomous car needs the intelligence to be able to steer around obstacles. Likewise, an autonomous aircraft needs to be able to maneuver out of a potential collision. We are witnessing an extraordinary increase in on-board autonomy, using Artificial Intelligence (AI) techniques such as Deep Learning to improve CAS technology.

The subset of autonomous aircraft called drones or Unmanned Aerial Vehicles (UAVs) poses extra challenges, however. The Size, Weight, and Power (SWAP) of a CAS onboard a drone is much more restricted than on a plane. Considering size, large cameras and electronics mean the drone may not fly as nimbly. With more weight, less payload can fly. With more power, less flying time is available, and larger batteries mean more weight.

As a result, a drone CAS must be simplified down to two functions at which it must excel:

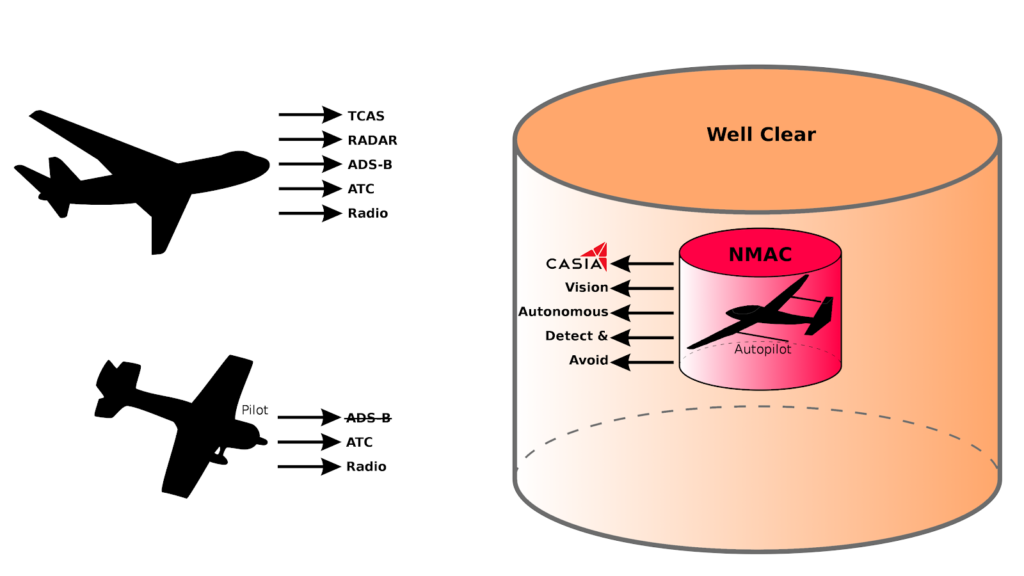

Detect and Avoid (DAA). The detect part means being able to spot other aircraft, often against a background of hills, and estimate the probability of danger far enough in advance that the drone has time to maneuver back to safety. The avoid part means getting out of the way, not merely before a possible impact, but before coming within the range the FAA calls a Near Mid-Air Collision (NMAC), which would result in a serious investigation and grounding of the aircraft. Better yet, the CAS should allow the drone to avoid a Well Clear breach; Well Clear is a much larger distance that serves as the standard margin of safe separation between aircraft.
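The two separation levels above can be sketched as a simple classifier. This is only an illustration: the article gives no numbers, so the threshold values below are placeholder assumptions, as is the choice to model each protected volume as a cylinder (a horizontal radius plus a vertical half-height). The function name `classify_separation` is hypothetical.

```python
# Placeholder thresholds in feet. These are ASSUMPTIONS for illustration only;
# the article does not state the NMAC or Well Clear dimensions.
NMAC_HORIZONTAL_FT = 500.0
NMAC_VERTICAL_FT = 100.0
WELL_CLEAR_HORIZONTAL_FT = 2000.0
WELL_CLEAR_VERTICAL_FT = 250.0


def classify_separation(horizontal_ft: float, vertical_ft: float) -> str:
    """Classify the current separation between the drone and an intruder.

    Each protected volume is modeled as a cylinder around the drone:
    the intruder is inside only if it is within both the horizontal
    and the vertical limit.
    """
    h = abs(horizontal_ft)
    v = abs(vertical_ft)
    if h < NMAC_HORIZONTAL_FT and v < NMAC_VERTICAL_FT:
        return "NMAC"
    if h < WELL_CLEAR_HORIZONTAL_FT and v < WELL_CLEAR_VERTICAL_FT:
        return "WELL_CLEAR_BREACH"
    return "CLEAR"


if __name__ == "__main__":
    for h, v in [(3000, 0), (1500, 200), (300, 50)]:
        print(h, v, classify_separation(h, v))
```

A real DAA system would also predict where both aircraft will be in the next several seconds rather than only classifying the current geometry, since the goal is to maneuver before either volume is entered.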

Practicality

Note that other pilots can barely see drones even when they know where to look, so the onus is on the drone to get out of the way. Also note that although the FAA has mandated that manned aircraft broadcast where they are, using a radio beacon called Automatic Dependent Surveillance-Broadcast (ADS-B), that hasn’t happened; most aircraft still don’t have it. So while the ability to receive ADS-B does help detect some aircraft, most potential collisions require that the drone be able to see. That means good cameras and other sensors, plenty of computing power, and batteries to keep up.

It’s useful to compare CAS on general aviation aircraft (jets, small planes, helicopters) to CAS on drones to realize how important it is for a drone to detect other aircraft visually. Jets or planes with more than 30 seats, or over 16.5 tons, have a Traffic Alert and Collision Avoidance System (TCAS) on board. This standard equipment assumes that other aircraft worth avoiding have it too. First, a large plane sends out a “squawk” that identifies the plane and reports its approximate altitude, to the nearest 100 feet. Then another aircraft (a large or small plane, or a helicopter) receives this information and learns that there is a large plane somewhere around it at roughly the same altitude. If the other aircraft’s RADAR detects an aircraft close to the same altitude, TCAS assumes that must be it, then alerts the pilot (situational awareness) and advises whether to climb or descend.

Think about this from an autonomous drone’s perspective. Can we assume all other aircraft have TCAS (or any other CAS, for that matter)? No. Moreover, flying below 400 feet Above Ground Level (AGL) and away from airports, a drone is more likely to encounter a small aircraft without a CAS than a commercial airliner. Even if the other aircraft does have a transponder that announces itself, can the drone use RADAR to find it? No. RADAR is too power-hungry for all but large (e.g., military) drones, and it makes the airframe less aerodynamic too. And even if the other aircraft does report altitude (via TCAS or ADS-B), a report that may be off by 100 feet vertically while we are flying below 400 feet can mean the difference between maneuvering out of the way and accidentally getting in the way.
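To see why a 100-foot altitude uncertainty matters so much at low altitude, here is a minimal sketch that bounds the true vertical separation given a reported altitude. The function name `vertical_separation_bounds` and the symmetric error model are assumptions made for illustration; the only figure taken from the text is the 100-foot uncertainty.

```python
def vertical_separation_bounds(own_alt_ft, reported_alt_ft, report_error_ft=100.0):
    """Return (min, max) possible vertical separation in feet.

    Assumes the intruder's true altitude lies anywhere within
    +/- report_error_ft of the reported value (a simplification).
    """
    lowest = reported_alt_ft - report_error_ft
    highest = reported_alt_ft + report_error_ft
    if lowest <= own_alt_ft <= highest:
        # The intruder could be exactly at the drone's altitude.
        min_sep = 0.0
    else:
        min_sep = min(abs(own_alt_ft - lowest), abs(own_alt_ft - highest))
    max_sep = max(abs(own_alt_ft - lowest), abs(own_alt_ft - highest))
    return min_sep, max_sep


if __name__ == "__main__":
    # Drone at 350 ft, intruder reported at 400 ft.
    print(vertical_separation_bounds(350, 400))
```

With the drone at 350 feet and an intruder reported at 400 feet, the intruder could actually be anywhere from 300 to 500 feet, so it may be above or below the drone. A climb or a descent chosen from the report alone could move the drone toward the other aircraft instead of away from it.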

The Vision

Drones need vision for Detect and Avoid (DAA); the old “cooperative” systems just don’t work for drones and small planes in low-altitude airspace. Iris Automation’s Casia uses cameras and computer vision onboard an autonomous drone to alert and trigger an avoidance maneuver to steer clear of a possible NMAC. Casia also listens for ADS-B locations and altitudes of cooperative aircraft, but the real purpose of Casia is to always be on the lookout for other non-cooperative aircraft heading our way and to react autonomously, because if a plane is closing in fast on a drone, we cannot afford to simply alert a human drone operator and wait for their emergency response. Whereas TCAS was designed to give airline pilots a couple of minutes’ warning, spotting a small plane heading blindly toward a drone at 150 knots gives the drone mere seconds to scoot.
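The "mere seconds" claim can be checked with back-of-the-envelope arithmetic. The sketch below assumes a head-on encounter at constant speed and ignores the drone's own motion; the detection range and protected range in the example are hypothetical numbers, not figures from Casia or the FAA. Only the 150-knot closing speed comes from the text.

```python
# One nautical mile is about 6076.12 ft, so 1 knot is about 1.688 ft/s.
KNOT_TO_FT_PER_S = 6076.12 / 3600.0


def seconds_to_react(detect_range_ft, protected_range_ft, closing_speed_kt):
    """Time available between first detection and the intruder reaching
    the protected range, assuming a constant head-on closing speed."""
    closing_ft_per_s = closing_speed_kt * KNOT_TO_FT_PER_S
    if closing_ft_per_s <= 0:
        return float("inf")  # not closing, so no deadline
    return max(0.0, (detect_range_ft - protected_range_ft) / closing_ft_per_s)


if __name__ == "__main__":
    # Hypothetical: intruder first seen at 4000 ft, must stay beyond 2000 ft.
    t = seconds_to_react(4000.0, 2000.0, 150.0)
    print(f"{t:.1f} s to detect, decide, and maneuver")
```

Under these assumed ranges the budget is roughly 7.9 seconds, and that window has to cover detection, classification, the avoidance decision, and the maneuver itself, which leaves no room for relaying an alert to a human operator and waiting for a response.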

When autonomous drones can stay well clear of manned aircraft, not needing a human in the loop means improved safety: immediate reaction time, always on, and never tired or distracted. Yet this is not the main benefit. Being able to autonomously avoid NMACs means the drone can be trusted to fly Beyond Visual Line of Sight (BVLOS). No longer are drone operations restricted to the squinting distance of a human; they are only restricted by the imagination of a new business plan.

References

[1] Introduction to TCAS II https://www.faa.gov/documentlibrary/media/advisory_circular/tcas%20ii%20v7.1%20intro%20booklet.pdf

[2] Everything You Need to Know About Mode C Transponders https://www.flyingmag.com/everything-you-need-to-know-about-mode-c-transponders/

[3] Uncorrelated Encounter Model of the National Airspace System https://www.ll.mit.edu/sites/default/files/publication/doc/2018-12/Kochenderfer_2008_ATC-345_WW-18178.pdf

[4] Ship Collision Avoidance Methods: State-of-the-Art https://www.researchgate.net/publication/336115193_Ship_collision_avoidance_methods_State-of-the-art